Random Variable (RV)

set of possible values: the "range"

- Also called support

"distribution function" R^2 (how "likely" something is to happen)

\int p(x)dx=1

\int^{0.75}_{0.25}2dx=1
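The second integral above is the normalization check for a density that equals 2 on [0.25, 0.75]. A minimal numerical sketch of that check follows. The names `integrate` and `uniform_pdf` are made up for this illustration, and the midpoint rule is just one simple way to approximate the integral.

```python
def integrate(pdf, lo, hi, steps=10_000):
    """Approximate the integral of pdf over [lo, hi] with the midpoint rule."""
    width = (hi - lo) / steps
    return sum(pdf(lo + (i + 0.5) * width) for i in range(steps)) * width


def uniform_pdf(x, a=0.25, b=0.75):
    """Density of a uniform RV on [a, b]: 1 / (b - a) inside, 0 outside."""
    return 1.0 / (b - a) if a <= x <= b else 0.0


# The density value is 2 on the support, yet the total area is still 1.
total = integrate(uniform_pdf, 0.0, 1.0)
```

Note that a pdf value can exceed 1; only the area under the curve is constrained.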

continuous: probability density function (pdf)

discrete: probability mass function (pmf)

- continuous unbounded

- Normal

- MLE (maximum likelihood estimation)

- discrete bounded

- Bernoulli, binomial, categorical (p(x)=p_i~\text{if}~x=i), multinomial

- continuous bounded

- uniform
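The MLE bullet in the list above has no formula in these notes. For reference, the standard closed-form maximum likelihood estimates for three of the listed distributions are sketched below. The symbols are assumptions of this sketch: n is the sample size, x_i the observations, and n_k the number of observations equal to category k.

```latex
\begin{aligned}
\text{Normal:}\quad
  & \hat{\mu} = \frac{1}{n}\sum_{i=1}^{n} x_i,
  \qquad
  \hat{\sigma}^2 = \frac{1}{n}\sum_{i=1}^{n} (x_i - \hat{\mu})^2 \\
\text{Bernoulli:}\quad
  & \hat{p} = \frac{1}{n}\sum_{i=1}^{n} x_i \\
\text{Categorical:}\quad
  & \hat{\lambda}_k = \frac{n_k}{n}
\end{aligned}
```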

Naive Bayes

| class | continuous (Normal) | binary (Bernoulli) | categorical |
| --- | --- | --- | --- |
| 1 | 0.5 | 0 | 1 |
| 1 | 2.5 | 1 | 2 |
| 2 | 4 | 0 | 3 |
| 3 | 2 | 1 | 2 |
| 4 | 1 | 0 | 1 |
| 1 | 2 | 1 | 3 |
| 2 | 7 | 1 | 2 |

Input: 1. labeled data 2. a distribution for each feature

- fit categorical to class labels

- for each class:
  - for each data dimension j:
    - fit the class-conditional MLE for dimension j

| class | dim 1 (Normal) | dim 2 (Bernoulli) | dim 3 (categorical) |
| --- | --- | --- | --- |
| 1 | \mu = 5/3, \sigma = | p = 2/3 | \lambda_1 = 1/3, \lambda_2 = 1/3, \lambda_3 = 1/3 |
| 2 |  |  |  |
| 3 |  |  |  |
| 4 |  |  |  |
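The fitting procedure can be sketched in a few lines of Python on the training rows from these notes. This is a minimal illustration, not a reference implementation. The names `DATA`, `CATEGORIES` and `fit_naive_bayes` are hypothetical, and the Normal variance uses the MLE form (divide by the class size, not by the class size minus one).

```python
from collections import Counter, defaultdict
from math import sqrt

# Training rows from the notes: (class, continuous, binary, categorical)
DATA = [
    (1, 0.5, 0, 1),
    (1, 2.5, 1, 2),
    (2, 4.0, 0, 3),
    (3, 2.0, 1, 2),
    (4, 1.0, 0, 1),
    (1, 2.0, 1, 3),
    (2, 7.0, 1, 2),
]
CATEGORIES = (1, 2, 3)  # possible values of the categorical feature


def fit_naive_bayes(rows):
    """Return (prior, params): class prior and per-class MLE parameters."""
    n = len(rows)
    # Step 1: fit a categorical distribution to the class labels.
    prior = {c: k / n for c, k in Counter(r[0] for r in rows).items()}

    # Group the feature vectors by class label.
    by_class = defaultdict(list)
    for label, *features in rows:
        by_class[label].append(features)

    # Step 2: for each class, fit one distribution per data dimension.
    params = {}
    for label, feats in by_class.items():
        m = len(feats)
        xs = [f[0] for f in feats]
        mu = sum(xs) / m                          # Normal mean
        var = sum((x - mu) ** 2 for x in xs) / m  # Normal variance (MLE)
        p = sum(f[1] for f in feats) / m          # Bernoulli parameter
        counts = Counter(f[2] for f in feats)
        lam = {k: counts[k] / m for k in CATEGORIES}  # categorical parameters
        params[label] = {"mu": mu, "sigma": sqrt(var), "p": p, "lambda": lam}
    return prior, params


prior, params = fit_naive_bayes(DATA)
```

For class 1 this reproduces the mean 5/3, p = 2/3 and the three categorical parameters of 1/3 each. Classes 3 and 4 have a single row, so their estimated sigma is 0; a practical implementation would need a variance floor or smoothing before using such a fit for inference.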

Naive Bayes Inference

- Input
  - features
- Output
  - a predicted label

- Terms
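The terms involved in Naive Bayes inference can be read off Bayes' rule combined with the conditional-independence assumption. In the sketch below, y is the class label and x_1, ..., x_d are the features; this notation is assumed here and does not come from the notes.

```latex
\underbrace{p(y \mid x_1, \dots, x_d)}_{\text{posterior}}
  = \frac{\overbrace{p(y)}^{\text{prior}}\;
          \overbrace{\prod_{j=1}^{d} p(x_j \mid y)}^{\text{likelihood}}}
         {\underbrace{p(x_1, \dots, x_d)}_{\text{evidence}}},
\qquad
\hat{y} = \arg\max_{y}\; p(y) \prod_{j=1}^{d} p(x_j \mid y)
```

The evidence term does not depend on y, so it can be dropped when only the predicted label is needed.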

Reasons to care about independence

- Assuming full independence is too restrictive

- Assuming no independence needs exponential space, and the resulting table is sparse

- Too much data is needed to estimate it
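The space argument can be made concrete by counting free parameters. The sketch below assumes n discrete variables with k values each. The function names are made up for this illustration.

```python
def full_joint_params(n, k=2):
    """No independence: one probability per joint outcome, minus 1 for normalization."""
    return k ** n - 1


def independent_params(n, k=2):
    """Full independence: each variable has its own (k - 1)-parameter distribution."""
    return n * (k - 1)


def naive_bayes_params(n, classes, k=2):
    """Features independent given the class: class prior plus one distribution per (class, feature)."""
    return (classes - 1) + classes * n * (k - 1)


# 20 binary variables: the full joint table is already over a million entries.
counts = (full_joint_params(20), independent_params(20), naive_bayes_params(20, classes=2))
```

Conditional independence sits between the two extremes: far fewer parameters than the full joint, but less restrictive than assuming every variable is independent of every other.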

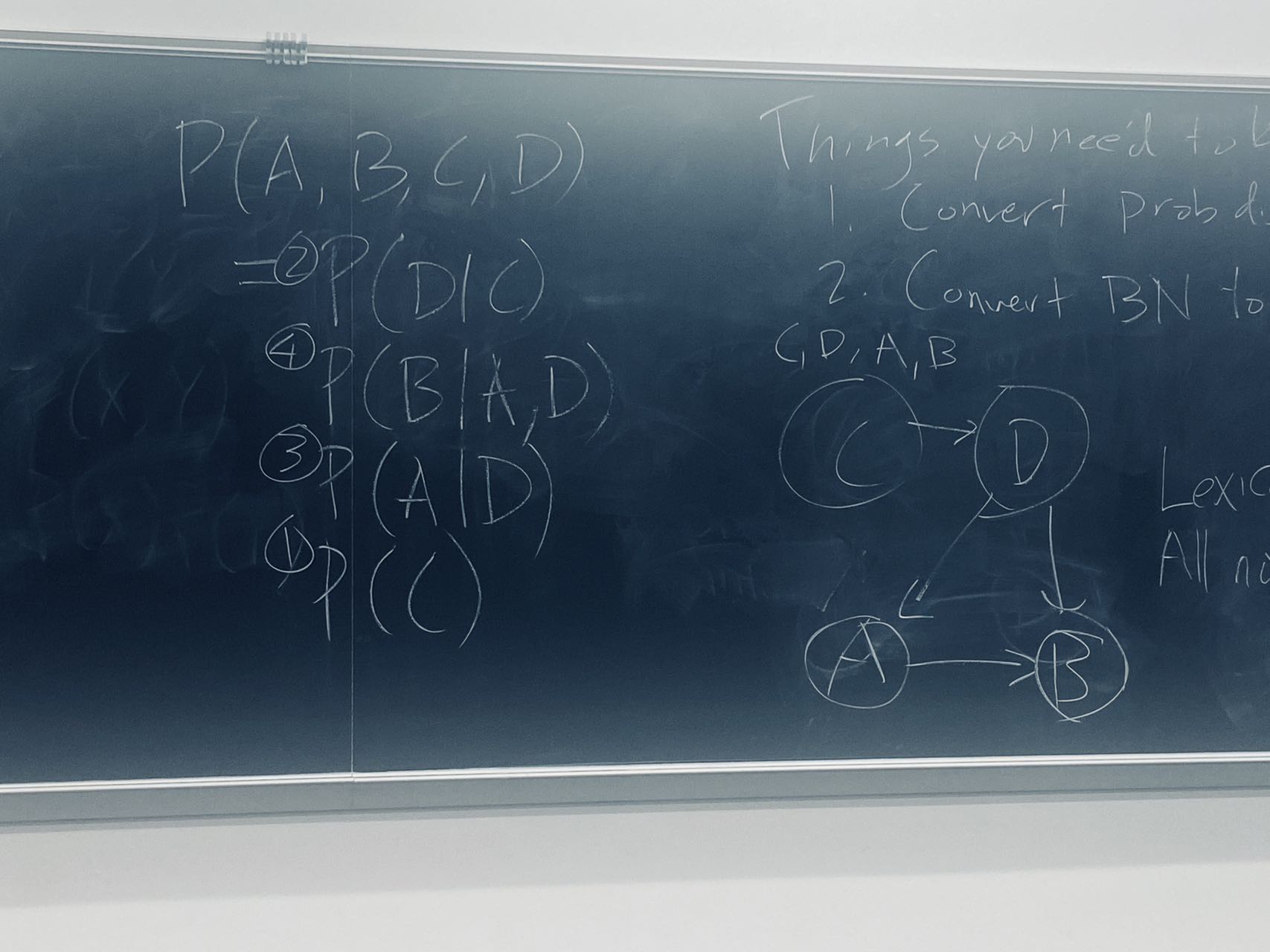

Chain rule of probability
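For reference, the chain rule factorizes any joint distribution into conditionals. A Bayes net simplifies each factor by conditioning only on the node's parents. The notation pa(x_i) for the parents of x_i is an assumption of this sketch.

```latex
% Chain rule: always valid, for any ordering of the variables
p(x_1, \dots, x_n) = \prod_{i=1}^{n} p(x_i \mid x_1, \dots, x_{i-1})

% Bayes net: each node depends only on its parents
p(x_1, \dots, x_n) = \prod_{i=1}^{n} p\bigl(x_i \mid \mathrm{pa}(x_i)\bigr)
```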

Bayes net inference

- Input:
  - set of variables that are observed (evidence)
  - set of variables whose distribution we want to know (query)
- Output: probability distribution over the query variables given the evidence
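One simple way to carry out this kind of inference is enumeration: sum the joint over every assignment consistent with the evidence, then normalize. The sketch below uses a hypothetical three-node network (rain and sprinkler are parents of wet). The structure and all the probabilities are invented for illustration and do not come from the notes.

```python
from itertools import product

VARS = ("rain", "sprinkler", "wet")

# Hypothetical parameters: P(rain), P(sprinkler), P(wet | rain, sprinkler)
P_RAIN = 0.2
P_SPRINKLER = 0.1
P_WET = {
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}


def joint(rain, sprinkler, wet):
    """Joint probability as the product of each node given its parents."""
    p = P_RAIN if rain else 1 - P_RAIN
    p *= P_SPRINKLER if sprinkler else 1 - P_SPRINKLER
    p_wet = P_WET[(rain, sprinkler)]
    p *= p_wet if wet else 1 - p_wet
    return p


def query(query_var, evidence):
    """Return P(query_var | evidence) as {value: probability}."""
    totals = {True: 0.0, False: 0.0}
    for values in product((True, False), repeat=len(VARS)):
        world = dict(zip(VARS, values))
        # Skip assignments that contradict the observed evidence.
        if any(world[name] != value for name, value in evidence.items()):
            continue
        totals[world[query_var]] += joint(**world)
    z = sum(totals.values())  # probability of the evidence
    return {value: p / z for value, p in totals.items()}


posterior = query("rain", {"wet": True})
```

Enumeration touches every joint assignment, so its cost grows exponentially with the number of variables; it is only meant to show what the input and output look like.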

Naive Bayes net learning

- Input:
  - set of nodes (RVs)
- Output:
  - set of edges (probability distributions)

Bayes net learning

- Input:
  - data x
- Output:
  - estimated probability distribution for each node (RV)

Check conditional independence relationships

- Input: two sets of variables to check, and a set of evidence

Things you need to know:

- Convert a probability distribution given in writing to a BN

- Convert a BN to a probability distribution

E.g.

Lexicographic:

- All parents are named before their children

Unsupervised learning

- Clustering input: data x

- Output: a label for each data point (cluster membership)

Alternating optimization for clustering

- Guess cluster assignment
- Repeat until converged:
  - Update membership to minimize energy
  - Update cluster identities to minimize energy

K-means
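K-means fits the pattern of alternately updating memberships and cluster centers, with the energy being the sum of squared distances from each point to its assigned center. The following is a minimal pure-Python sketch. The function names are made up, and initializing the centers by sampling k data points is an assumption of this sketch, one of several possible choices.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def kmeans(points, k, seed=0, max_iters=100):
    """Return (assignment, centers) after alternating updates until convergence."""
    rng = random.Random(seed)
    # Initial guess: k distinct data points serve as the starting centers.
    centers = rng.sample(points, k)
    assignment = None
    for _ in range(max_iters):
        # Membership update: each point joins its nearest center.
        new_assignment = [
            min(range(k), key=lambda c: squared_distance(p, centers[c]))
            for p in points
        ]
        if new_assignment == assignment:
            break  # converged: no membership changed
        assignment = new_assignment
        # Center update: each center moves to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # keep the old center if a cluster is empty
                centers[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return assignment, centers


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
assignment, centers = kmeans(points, k=2)
```

Neither update increases the energy, so the loop terminates, but the result can depend on the initial guess.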

Two sticky issues with k-means

- How to initialize?

- How to pick K?

Supervised Learning

- Linear regression: regression, loss = SSE

- Naive Bayes: classification, loss = data labeled
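SSE is the sum of squared errors. Written out for linear regression, with weights w, bias b, and training pairs (x_i, y_i) as notation assumed for this sketch:

```latex
\mathrm{SSE}(w, b) = \sum_{i=1}^{n} \bigl(y_i - \hat{y}_i\bigr)^2,
\qquad
\hat{y}_i = w^{\top} x_i + b
```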