Computer Vision

CNN Architectures

- Dense Layers (used as classifier)

- Convolution Layer (used as feature extraction layer)

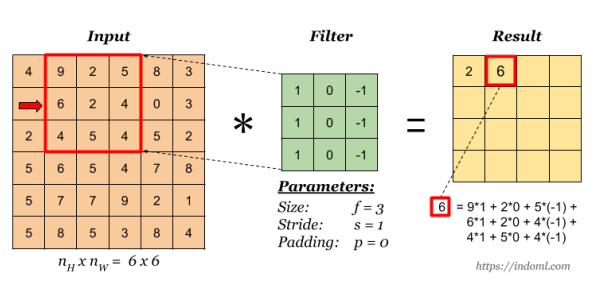

- Convolution operation

- Activation function

- Pooling

  - Used to reduce the spatial size of the input

  - Summarizes the information for a particular part of the image

- Multi-Channel Convolution Operation
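The three stages listed above (convolution, activation, pooling) can be sketched in plain Python. This is a minimal illustration rather than an efficient implementation: the function names, the toy 2-channel 5×5 input, and the 2×2 kernel are all assumptions made for the example. As in most deep learning libraries, the "convolution" is computed as a cross-correlation (no kernel flip), with no padding and stride 1.

```python
def conv2d_multichannel(image, kernel):
    """Cross-correlate a C x H x W image with a C x kH x kW kernel.

    Products are summed over every channel, so one kernel yields a single
    (H - kH + 1) x (W - kW + 1) feature map.
    """
    channels = len(image)
    height, width = len(image[0]), len(image[0][0])
    k_h, k_w = len(kernel[0]), len(kernel[0][0])
    out = []
    for i in range(height - k_h + 1):
        row = []
        for j in range(width - k_w + 1):
            total = 0.0
            for c in range(channels):
                for di in range(k_h):
                    for dj in range(k_w):
                        total += image[c][i + di][j + dj] * kernel[c][di][dj]
            row.append(total)
        out.append(row)
    return out


def relu(feature_map):
    """Element-wise activation: negative responses are clipped to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; each window is summarized by its maximum."""
    out = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        out.append(row)
    return out


# Toy input (assumed for illustration): 2 channels, 5 x 5 pixels each.
image = [
    [[5 * r + c for c in range(5)] for r in range(5)],  # channel 0: a ramp
    [[1 for _ in range(5)] for _ in range(5)],          # channel 1: constant
]
# One 2 x 2 kernel with a separate slice per input channel.
kernel = [
    [[-1, 0], [0, 1]],
    [[0.5, 0.5], [0.5, 0.5]],
]

features = relu(conv2d_multichannel(image, kernel))  # 4 x 4 feature map
pooled = max_pool2d(features, size=2)                # 2 x 2 after pooling
```

A real convolution layer applies many such kernels in parallel, producing one output channel per kernel.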

AlexNet - Two Tower Design

- Convolutional neural network (CNN) based architecture

- One of the first architectures to demonstrate the potential of CNNs on image-related tasks like object recognition and detection

VGG

- Increased the model depth while using only small 3×3 filters, which need fewer weights than larger filters covering the same receptive field

Receptive field

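- Two stacked 3×3 convolutions (stride 1) cover the same receptive field as one 5×5 convolution; three stacked 3×3 convolutions cover the same receptive field as one 7×7 convolution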
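The equivalence can be checked with the standard receptive-field recurrence: each layer widens the field by (kernel size − 1) times the product of the strides of the layers before it. The helper below is a small sketch; its name and signature are assumptions for illustration.

```python
def receptive_field(kernel_sizes, strides=None):
    """Receptive field, in input pixels, of one output unit of a conv stack."""
    if strides is None:
        strides = [1] * len(kernel_sizes)
    field, jump = 1, 1  # jump = distance in input pixels between adjacent units
    for k, s in zip(kernel_sizes, strides):
        field += (k - 1) * jump
        jump *= s
    return field


two_3x3 = receptive_field([3, 3])       # same field as one 5 x 5 convolution
three_3x3 = receptive_field([3, 3, 3])  # same field as one 7 x 7 convolution
```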

Advantages of decreasing the per-layer receptive field (stacked 3×3 filters instead of one 7×7 filter)

- More non-linear rectification layers (three ReLU layers instead of one)

- Makes the decision function more discriminative

- Reduces the number of trainable parameters

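  - C: number of channels in both the input and the output of each layer

  - One 7×7 convolution layer: 7² · C² = 49C² weights

  - Three stacked 3×3 convolution layers (same 7×7 receptive field): 3 · (3² · C²) = 27C² weights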
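The parameter saving can be verified with a quick count. The sketch below assumes every layer has C input and C output channels and ignores biases; `conv_stack_weights` and the choice C = 64 are illustrative assumptions.

```python
def conv_stack_weights(kernel_size, num_layers, channels):
    """Weights in a stack of conv layers, each mapping `channels` -> `channels`."""
    return num_layers * kernel_size * kernel_size * channels * channels


C = 64  # illustrative channel count
one_7x7 = conv_stack_weights(kernel_size=7, num_layers=1, channels=C)    # 49 * C^2
three_3x3 = conv_stack_weights(kernel_size=3, num_layers=3, channels=C)  # 27 * C^2
saving = 1 - three_3x3 / one_7x7  # fraction of weights saved, independent of C
```

Because both counts scale with C², the ratio 27/49 holds for any channel count: the stacked 3×3 design uses roughly 45% fewer weights.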

Sparsifying Network

Challenge

- Modern computing hardware is very inefficient at numerical computation on sparse data structures

- Even though the number of arithmetic operations decreases, the overhead of lookups increases

Solution

- Translation invariance can be achieved by building the network from convolutional blocks

- We need to find the optimal local construction and repeat it spatially

Problem with the Naïve Inception Module

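- Problem: Pooling layers do not reduce the channel dimension, so the concatenated output of the module keeps growing in depth

- Solution: Use 1×1 convolutions to reduce the number of channels before the more expensive larger convolutions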
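A 1×1 convolution mixes channels at each pixel without touching the spatial size, which is why it works as a cheap channel reducer. The sketch below shows the operation itself and a rough multiply-accumulate count with and without a 1×1 bottleneck. The function names and the layer sizes (a 28×28 map, 192 input channels reduced to 16 before a 5×5 convolution with 32 outputs) are illustrative assumptions, not values taken from these notes.

```python
def conv1x1(feature_maps, weights):
    """Apply a 1 x 1 convolution.

    feature_maps: C_in x H x W nested lists; weights: C_out x C_in.
    Returns C_out x H x W: each output pixel is a weighted sum over channels.
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    return [
        [
            [sum(w * feature_maps[c][i][j] for c, w in enumerate(w_out))
             for j in range(width)]
            for i in range(height)
        ]
        for w_out in weights
    ]


def conv_macs(height, width, kernel_size, c_in, c_out):
    """Multiply-accumulates for a conv layer with a height x width output."""
    return height * width * kernel_size * kernel_size * c_in * c_out


# Three input channels squeezed into one output channel; spatial size unchanged.
maps = [[[1, 1], [1, 1]], [[2, 2], [2, 2]], [[3, 3], [3, 3]]]
reduced_maps = conv1x1(maps, weights=[[1, 1, 1]])

# Cost of a 5 x 5 convolution applied directly vs. after a 1 x 1 reduction.
direct = conv_macs(28, 28, 5, c_in=192, c_out=32)
bottleneck = conv_macs(28, 28, 1, c_in=192, c_out=16) + conv_macs(28, 28, 5, c_in=16, c_out=32)
```

With these assumed sizes, the bottleneck path needs roughly a tenth of the multiply-accumulates of the direct 5×5 convolution.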

ResNet

Residual Learning

- Let 𝐻 be the mapping that a stack of hidden layers should learn

- 𝑦 = 𝐻(𝑥)

- Instead of learning this mapping directly, the hidden layers can learn the residual of 𝐻(𝑥) with respect to 𝑥

- 𝐹(𝑥) = 𝐻(𝑥) − 𝑥

- This can be rearranged to 𝐹(𝑥) + 𝑥 = 𝐻(𝑥)

- Here 𝐹(𝑥) is the new mapping the hidden layers learn, and 𝑥 is added back through a skip connection

- This formulation is easier to optimize: in the extreme case where the identity mapping is optimal, 𝐹(𝑥) can simply be driven to zero

- Learning an identity mapping directly through a stack of non-linear layers is very difficult
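The identity argument can be seen in a small sketch of a residual block on vectors. It assumes 𝐹(𝑥) is two fully connected layers with a ReLU in between, and it omits the ReLU that ResNet typically applies after the addition so that the output is exactly 𝐹(𝑥) + 𝑥. The function names and weights are illustrative assumptions.

```python
def matvec(matrix, vector):
    """Matrix-vector product on nested lists."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def relu_vector(vector):
    return [max(0.0, v) for v in vector]


def residual_block(x, w1, w2):
    """Return H(x) = F(x) + x, where F(x) = w2 @ relu(w1 @ x)."""
    f_x = matvec(w2, relu_vector(matvec(w1, x)))
    return [f + xi for f, xi in zip(f_x, x)]  # skip connection adds x back


x = [1.0, -2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]

# With all weights at zero, F(x) = 0 and the block reduces to the identity.
identity_out = residual_block(x, zeros, zeros)
```

Without the skip connection, the same two layers would have to reproduce 𝑥 through the ReLU, which discards negative values, so the identity is much harder to represent.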