
HPC Introduction

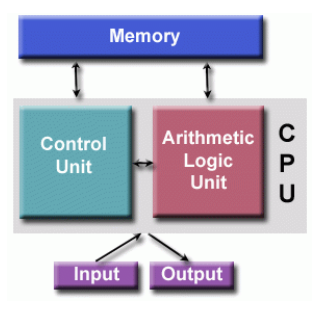

Von Neumann Computer Architecture

Flynn's Classical Taxonomy

General Parallel Computing Terminology

- Node

- A standalone "computer in a box," usually composed of multiple CPUs/processors/cores, memory, network interfaces, etc. Nodes are networked together to comprise a supercomputer.

- Task

- A logically discrete section of computational work. A task is typically a program or program-like set of instructions that is executed by a processor. A parallel program consists of multiple tasks running on multiple processors

- Pipelining

- Breaking a task into steps performed by different processor units, with inputs streaming through, much like an assembly line; a type of parallel computing

- Shared Memory

- Describes a computer architecture where all processors have direct access to common physical memory. In a programming sense, it describes a model where parallel tasks all have the same "picture" of memory and can directly address and access the same logical memory locations regardless of where the physical memory actually exists

- Symmetric Multi-Processor (SMP)

- Shared memory hardware architecture where multiple processors share a single address space and have equal access to all resources - memory, disk, etc.

- Distributed Memory

- In hardware, refers to network-based memory access for physical memory that is not common. As a programming model, tasks can only logically "see" local machine memory and must use communications to access memory on other machines where other tasks are executing

- Communications

- Parallel tasks typically need to exchange data. There are several ways this can be accomplished, such as through a shared memory bus or over a network.

- Synchronization

- The coordination of parallel tasks in real time, very often associated with communications

- Synchronization usually involves waiting by at least one task, and can therefore cause a parallel application's wall clock execution time to increase.

- Computational Granularity

- Coarse: relatively large amounts of computational work are done between communication events

- Fine: relatively small amounts of computational work are done between communication events

- Observed Speedup

- Observed speedup of a code which has been parallelized, defined as

- (wall-clock time of serial execution)/(wall-clock time of parallel execution)

- Parallel Overhead

- Required execution time that is unique to parallel tasks, as opposed to that for doing useful work. Includes factors such as: task start-up/termination time; synchronizations and communication; software overhead imposed by parallel languages, libraries, operating system, etc.

- Embarrassingly (Ideally) Parallel

- Solving many similar, but independent, tasks simultaneously; little to no need for coordination between the tasks

- Scalability

- Refers to a parallel system's (hardware and/or software) ability to demonstrate a proportionate increase in parallel speedup with the addition of more resources. Factors that contribute to scalability include:

- Hardware: particularly memory/cpu bandwidths and network communication properties

- Application algorithm; related parallel overhead; characteristics of your specific application
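The Observed Speedup definition can be checked with a small timing experiment. This is a minimal sketch in Python, assuming a hypothetical `simulated_task` that sleeps to stand in for real work. The tasks are independent (embarrassingly parallel), so a thread pool can run them with no coordination. Sleeping releases Python's global interpreter lock, which is why threads overlap here; CPU-bound Python code would need processes instead.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_task(seconds: float) -> float:
    """Hypothetical stand-in for one independent unit of work."""
    time.sleep(seconds)  # sleep releases the GIL, so threads can overlap
    return seconds


def observed_speedup(serial_time: float, parallel_time: float) -> float:
    """(wall-clock time of serial execution) / (wall-clock time of parallel execution)."""
    return serial_time / parallel_time


def run_serial(durations):
    start = time.perf_counter()
    results = [simulated_task(d) for d in durations]
    return results, time.perf_counter() - start


def run_parallel(durations, workers):
    start = time.perf_counter()
    # Tasks never talk to each other: embarrassingly parallel, no coordination.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(simulated_task, durations))
    return results, time.perf_counter() - start


durations = [0.05] * 8
serial_results, t_serial = run_serial(durations)
parallel_results, t_parallel = run_parallel(durations, workers=4)
print(f"serial {t_serial:.3f} s, parallel {t_parallel:.3f} s, "
      f"observed speedup {observed_speedup(t_serial, t_parallel):.2f}x")
```

With 8 tasks and 4 workers the ideal speedup is 4x; the measured value should come in somewhat below that, and the gap is parallel overhead such as thread start-up and scheduling.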

Amdahl’s Law

- Maximum speedup, as the number of processors grows without bound: $\text{Speedup} = \frac{1}{1 - P}$

- With N processors: $\text{Speedup} = \frac{1}{\frac{P}{N} + S}$

- P = parallel fraction

- N = number of processors

- S = serial fraction ($S = 1 - P$)
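Amdahl's Law is easy to tabulate. A minimal Python sketch; the function name `amdahl_speedup` is illustrative, not from any library, and it uses S = 1 - P as above:

```python
import math


def amdahl_speedup(p: float, n: float) -> float:
    """Amdahl's law: speedup = 1 / (p/n + s) with serial fraction s = 1 - p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("parallel fraction p must be in [0, 1]")
    if n < 1:
        raise ValueError("need at least one processor")
    s = 1.0 - p
    return 1.0 / (p / n + s)


for p in (0.50, 0.90, 0.95):
    row = ", ".join(f"{amdahl_speedup(p, n):6.2f}" for n in (1, 10, 100, 1000))
    print(f"P = {p:.2f}: {row}  (limit {amdahl_speedup(p, math.inf):.2f})")
```

For P = 0.95 the limit is 1/0.05 = 20: no number of processors pushes the speedup past 20x, because the 5% serial part still runs at serial speed.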

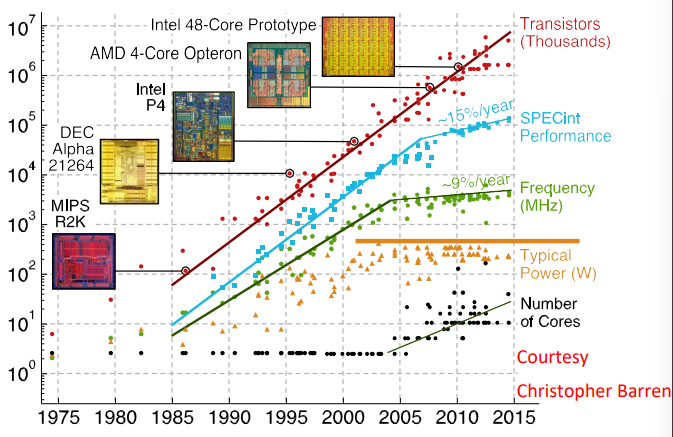

Moore’s Law

- The number of transistors in a dense integrated circuit (IC) doubles about every two years
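As a formula, assuming an exact two-year doubling period, a transistor count $C_0$ at year $t = 0$, and $t$ measured in years (the symbols are introduced here only for illustration):

$$C(t) = C_0 \cdot 2^{t/2}, \qquad C(10) = C_0 \cdot 2^{5} = 32\,C_0$$

so one decade at that pace multiplies the transistor count by about 32.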

Scalability

- Strong scaling

- The total problem size stays fixed as more processors are added

- Goal is to run the same problem size faster

- Perfect scaling means the problem is solved in 1/N of the serial time when using N processors

- Weak scaling (Gustafson)

- The problem size per processor stays fixed as more processors are added. The total problem size is proportional to the number of processors used.

- Goal is to run a larger problem in the same amount of time

- Perfect scaling means a problem N times larger runs on N processors in the same time as the original problem on a single processor
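The two notions of perfect scaling translate into two efficiency measures, and the weak-scaling view is the one Gustafson's law describes. A minimal Python sketch; the function names and the timings in the usage lines are hypothetical, chosen only to illustrate the arithmetic. In `gustafson_speedup`, `s` is the serial fraction of the run on n processors.

```python
def strong_scaling_efficiency(t1: float, tn: float, n: int) -> float:
    """Fixed total problem size: perfect scaling means tn == t1 / n (efficiency 1.0)."""
    return t1 / (n * tn)


def weak_scaling_efficiency(t1: float, tn: float) -> float:
    """Fixed problem size per processor: perfect scaling means tn == t1 (efficiency 1.0)."""
    return t1 / tn


def gustafson_speedup(s: float, n: int) -> float:
    """Gustafson's scaled speedup; s is the serial fraction of the n-processor run."""
    return s + (1.0 - s) * n


# Hypothetical timings, for illustration only.
t1 = 100.0  # seconds on 1 processor
print(f"strong, 4 procs, 30 s:  {strong_scaling_efficiency(t1, 30.0, 4):.2f}")
print(f"weak, 4 procs, 110 s:   {weak_scaling_efficiency(t1, 110.0):.2f}")
print(f"Gustafson, s=0.05, 100: {gustafson_speedup(0.05, 100):.2f}")
```

Note the contrast with Amdahl's Law: with a 5% serial fraction, the scaled speedup on 100 processors is about 95, not capped at 20, because the problem grows with the machine.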

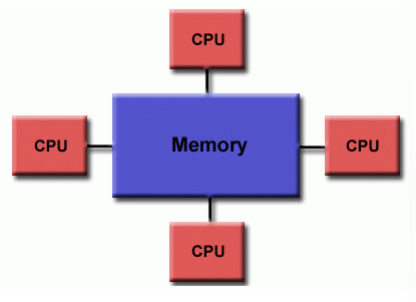

Shared Memory

- Ability for all processors to access all memory as global address space

- Multiple processors can operate independently but share the same memory resources

- Changes in a memory location effected by one processor are visible to all other processors

- Classified as Uniform Memory Access (UMA) and Non-Uniform Memory Access (NUMA), based upon memory access times.
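Threads inside one operating-system process are a convenient small-scale analogy for the shared memory model: every thread addresses the same objects, and a write by one thread is visible to the others. A minimal Python sketch, assuming each thread writes only its own slot so no synchronization is needed:

```python
import threading

# One list in a single address space, visible to every thread.
shared = [0] * 4


def worker(index: int) -> None:
    # Each thread writes only its own slot, so no lock is needed here.
    shared[index] = index * index


threads = [threading.Thread(target=worker, args=(i,)) for i in range(len(shared))]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(shared)  # [0, 1, 4, 9]
```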

Uniform Memory Access (UMA)

- Identical processors

- Equal access and access times to memory

- Sometimes called CC-UMA - Cache Coherent UMA

- Cache coherent means if one processor updates a location in shared memory, all the other processors know about the update

- Cache coherency is accomplished at the hardware level

Non-Uniform Memory Access (NUMA)

- Often made by physically linking two or more SMPs

- One SMP can directly access memory of another SMP

- Not all processors have equal access time to all memories

- Memory access across link is slower

- If cache coherency is maintained, then may also be called CC-NUMA - Cache Coherent NUMA

Shared Memory: Advantages and Disadvantages

- Advantages

- User-friendly programming, thanks to the global address space

- Data sharing between tasks is both fast and uniform

- Disadvantages

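- Lack of scalability between memory and CPUs: adding more CPUs can greatly increase traffic on the shared memory-CPU path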

- Programmer responsibility for synchronization constructs

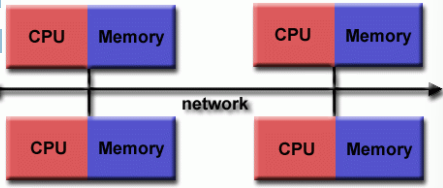

Distributed Memory

- Requires a communication network to connect inter-processor memory

- Processors have their own local memory

- Memory addresses in one processor do not map to another processor

- No concept of global address space across all processors

- No concept of cache coherency
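The distributed memory programming model can be simulated on one machine to show its key property: a task sees only its own memory and gets other data only through explicit messages. A minimal Python sketch under loud assumptions: threads stand in for separate nodes, each "task" touches only its own entry in `local_memory` by convention, and `send`/`recv` are hypothetical helpers built on `queue.Queue` (real codes use separate processes and a message-passing library such as MPI).

```python
import queue
import threading

NUM_TASKS = 2
# One mailbox per task: the only channel through which data moves between tasks.
mailboxes = [queue.Queue() for _ in range(NUM_TASKS)]
# Each task's private "local memory"; by convention a task touches only its own entry.
local_memory = [{} for _ in range(NUM_TASKS)]


def send(dest: int, data: list) -> None:
    # A message carries a copy of the data, as it would after crossing a network.
    mailboxes[dest].put(list(data))


def recv(rank: int) -> list:
    # Blocks until a message for this task arrives.
    return mailboxes[rank].get()


def task(rank: int) -> None:
    mem = local_memory[rank]
    if rank == 0:
        mem["a"] = [1, 2, 3]
        send(1, mem["a"])
        mem["a"][0] = 99  # only task 0's copy changes
    else:
        mem["a"] = recv(rank)  # task 1 stores its own copy


threads = [threading.Thread(target=task, args=(r,)) for r in range(NUM_TASKS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(local_memory[0]["a"], local_memory[1]["a"])  # [99, 2, 3] [1, 2, 3]
```

Because the message is a copy, task 0's later update never reaches task 1: there is no global address space and nothing to keep coherent.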

Distributed Memory: Advantages and Disadvantages

- Advantages

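- Scalable: memory grows proportionately with the number of processors

- Each processor can rapidly access its own memory without interference and without the overhead of maintaining global cache coherency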

- Cost effectiveness: can use commodity, off-the-shelf processors and networking

- Disadvantages

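- The programmer is responsible for many of the details associated with data communication between processors

- May be difficult to map existing data structures, based on global memory, to this memory organization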

- Non-uniform memory access times

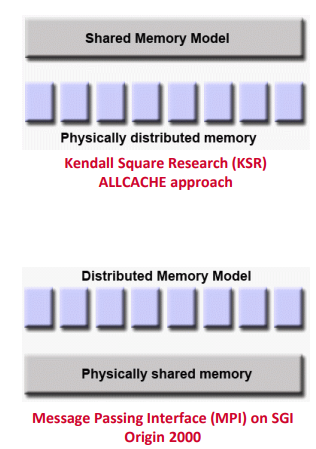

Hybrid Distributed-Shared Memory

- Advantages and Disadvantages

- Whatever is common to both shared and distributed memory architectures

- Increased scalability is an important advantage

- Increased programmer complexity is an important disadvantage

Parallel Programming Models

Synchronous vs. Asynchronous Communications

- Synchronous communications require some type of "handshaking" between tasks

- Synchronous communications are often referred to as blocking communications

- Asynchronous communications are often referred to as non-blocking communications
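The blocking/non-blocking distinction can be seen with Python's standard `queue.Queue`, used here as an analogy for a receive operation: `get()` waits until a message arrives, while `get_nowait()` returns immediately and raises `queue.Empty` if nothing is there. A minimal sketch; the `release_sender` event is an assumption added only to make the first probe deterministic.

```python
import queue
import threading

channel = queue.Queue()
release_sender = threading.Event()


def sender() -> None:
    release_sender.wait()  # held back so the first probe is guaranteed to find nothing
    channel.put("data")


threading.Thread(target=sender).start()

# Non-blocking receive: returns immediately whether or not a message is there.
try:
    probe = channel.get_nowait()
except queue.Empty:
    probe = None  # nothing yet; the task could do other useful work here

release_sender.set()

# Blocking receive: the task waits here until the message arrives.
msg = channel.get()
print(probe, msg)  # None data
```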

Scope of Communications

- Point-to-point

- Involves two tasks, with one task acting as the sender/producer of data and the other acting as the receiver/consumer.

- Collective

- Involves data sharing between more than two tasks, which are often specified as being members of a common group, or collective.
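A collective operation can be built out of point-to-point messages. A minimal Python sketch, assuming threads stand in for tasks and hypothetical `send`/`recv` helpers over per-task queues; `broadcast` and `reduce_sum` are illustrative names loosely modeled on the broadcast and reduction collectives found in message-passing libraries, not any library's API.

```python
import queue
import threading

NUM_TASKS = 4
ROOT = 0
mailboxes = [queue.Queue() for _ in range(NUM_TASKS)]
received = [None] * NUM_TASKS  # value each task got from the broadcast
total_at_root = None


def send(dest, value):
    """Point-to-point: exactly one sender and one receiver."""
    mailboxes[dest].put(value)


def recv(rank):
    return mailboxes[rank].get()


def broadcast(rank, value=None):
    """Collective: the root's value ends up on every task."""
    if rank == ROOT:
        for dest in range(NUM_TASKS):
            if dest != ROOT:
                send(dest, value)
        return value
    return recv(rank)


def reduce_sum(rank, value):
    """Collective: the root receives the sum of every task's value."""
    if rank == ROOT:
        return value + sum(recv(ROOT) for _ in range(NUM_TASKS - 1))
    send(ROOT, value)
    return None


def task(rank):
    global total_at_root
    # Messages are untagged; this works only because non-root mailboxes see
    # broadcast messages and the root mailbox sees only reduction messages.
    scale = broadcast(rank, 10 if rank == ROOT else None)
    received[rank] = scale
    total = reduce_sum(rank, scale * rank)
    if rank == ROOT:
        total_at_root = total


threads = [threading.Thread(target=task, args=(r,)) for r in range(NUM_TASKS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(received, total_at_root)  # [10, 10, 10, 10] 60
```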

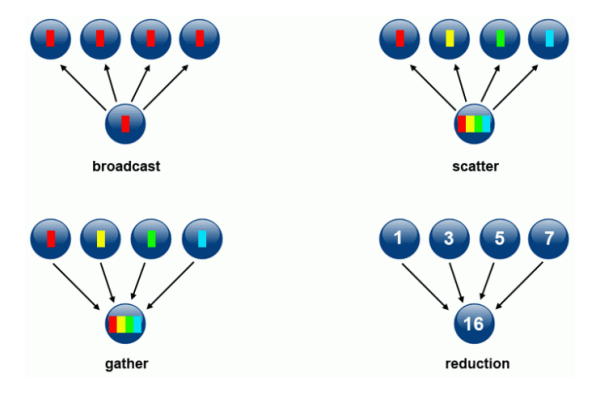

Types of Collective Communication

Synchronization

- Types of Synchronization

- Barrier

- Each task works until it reaches the barrier and then blocks; when the last task reaches the barrier, all tasks are synchronized.

- Lock/Semaphore

- The first task to acquire the lock "sets" it

- This task can then safely (serially) access the protected data or code.

- Other tasks can attempt to acquire the lock but must wait until the task that owns the lock releases it.

- Synchronous communication operations

- For example, before a task can perform a send operation, it must first receive an acknowledgment from the receiving task that it is OK to send
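The barrier and lock types above map directly onto Python's `threading.Barrier` and `threading.Lock`. A minimal sketch with threads standing in for tasks: the lock serializes updates to a shared counter so no increment is lost, and the barrier guarantees that no task starts its second phase before every task has finished the first.

```python
import threading

NUM_TASKS = 4
INCREMENTS = 1000
barrier = threading.Barrier(NUM_TASKS)
lock = threading.Lock()
counter = 0
log = []


def task(rank: int) -> None:
    global counter
    # Lock: only the task holding it may update the shared counter, so no
    # increment is lost to a race.
    for _ in range(INCREMENTS):
        with lock:
            counter += 1
    with lock:
        log.append(("before-barrier", rank))
    # Barrier: each task blocks here until the last task arrives.
    barrier.wait()
    with lock:
        log.append(("after-barrier", rank))


threads = [threading.Thread(target=task, args=(r,)) for r in range(NUM_TASKS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 4000
print([tag for tag, _ in log])
```

The order of ranks within each phase can vary from run to run, but every "before-barrier" entry always precedes every "after-barrier" entry.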

“Best Practices” for I/O

Rule #1: Reduce overall I/O as much as possible.

- I/O operations are generally regarded as inhibitors to parallelism.

- I/O operations require orders of magnitude more time than memory operations.
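One common way to reduce the number of I/O operations is to aggregate many small writes into one large one. A minimal Python sketch; `CountingWriter` is a hypothetical helper that only counts application-level write requests. Python's own file objects already buffer small writes, so this shows the request count the program issues, which is what matters when each request is expensive (for example, unbuffered I/O or a shared parallel file system).

```python
import os
import tempfile


class CountingWriter:
    """Hypothetical helper: wraps a file object and counts write() requests."""

    def __init__(self, f):
        self._f = f
        self.calls = 0

    def write(self, s: str) -> int:
        self.calls += 1
        return self._f.write(s)


records = [f"{i},{i * i}\n" for i in range(10_000)]


def write_many_small(path: str) -> int:
    with open(path, "w") as raw:
        out = CountingWriter(raw)
        for line in records:
            out.write(line)  # one I/O request per record
        return out.calls


def write_one_large(path: str) -> int:
    with open(path, "w") as raw:
        out = CountingWriter(raw)
        out.write("".join(records))  # aggregate in memory, then one request
        return out.calls


with tempfile.TemporaryDirectory() as tmp:
    small_path = os.path.join(tmp, "small.csv")
    large_path = os.path.join(tmp, "large.csv")
    small_calls = write_many_small(small_path)
    large_calls = write_one_large(large_path)
    with open(small_path) as a, open(large_path) as b:
        same_contents = a.read() == b.read()

print(small_calls, large_calls, same_contents)  # 10000 1 True
```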