High-Performance Deep Learning (CSE 5449)

Intro

History

- ImageNet

- AlexNet

Definitions

- Machine Learning

- The ability of machines to learn without being explicitly programmed

- Supervised Learning

- We provide the machine with the “right answers”

- Classification – Discrete value output (e.g. email is spam or not-spam)

- Regression – Continuous output values (e.g. house prices)

- Unsupervised Learning

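- No “right answers” are given. The machine has to learn by itself; no labels for you!

- Clustering – Group the data points that are “close” to each other; finding structure in the data is the key here! (The cocktail party problem, often cited here, is strictly a source-separation task rather than clustering, but it is unsupervised in the same sense.)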

DNN Training

- Backward Pass

- Compute the gradients of the loss, then use them to update the weights
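
A training step can be sketched on a single sigmoid neuron to make the backward pass concrete. This is a minimal illustration, not the course's code: the function name `train_step`, the squared-error loss, and the toy input are all assumptions chosen to keep the chain rule visible.

```python
import math

def train_step(w, b, x, y, lr):
    """One forward pass, one backward pass, one weight update (hypothetical helper)."""
    # Forward pass: compute the prediction and the loss.
    z = w * x + b
    a = 1.0 / (1.0 + math.exp(-z))      # sigmoid activation
    loss = 0.5 * (a - y) ** 2           # squared error (an assumption for this sketch)

    # Backward pass: chain rule, dloss/dw = dloss/da * da/dz * dz/dw.
    dloss_da = a - y
    da_dz = a * (1.0 - a)
    grad_w = dloss_da * da_dz * x
    grad_b = dloss_da * da_dz * 1.0

    # Update: move the weights against the gradient.
    return w - lr * grad_w, b - lr * grad_b, loss

w, b = 0.0, 0.0
losses = []
for _ in range(100):
    w, b, loss = train_step(w, b, x=1.0, y=1.0, lr=1.0)
    losses.append(loss)

print(f"first loss = {losses[0]:.4f}, last loss = {losses[-1]:.4f}")
```

In a real framework the backward-pass lines are what the automatic differentiation engine computes for you.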

Essential Concepts: Activation function and Back-propagation

- Back-propagation

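- Involves fairly complicated mathematics (repeated application of the chain rule).

- Luckily, most DL frameworks give you a one-line implementation, for example:

model.backward()

(In PyTorch the equivalent call is loss.backward(), made on the loss tensor.)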

- Activation functions

- Introduce non-linearity into the function the network computes

- Non-linearities allow the network to approximate complex, non-linear functions.

- ReLU, the max function max(0, x), is the most common activation function.

- Common choices: Sigmoid, Tanh, ReLU, Leaky ReLU
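
The four activation functions listed above can be written out in a few lines. This is a plain-Python sketch for scalars; the `negative_slope` default of 0.01 for Leaky ReLU is a commonly used value and an assumption here, not something the notes specify.

```python
import math

def sigmoid(x: float) -> float:
    """Squash x into (0, 1); branches on the sign to avoid overflow in exp."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def tanh(x: float) -> float:
    """Squash x into (-1, 1)."""
    return math.tanh(x)

def relu(x: float) -> float:
    """max(0, x): pass positives through, zero out negatives."""
    return max(0.0, x)

def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    """Like ReLU, but negatives keep a small slope instead of becoming zero."""
    return x if x > 0 else negative_slope * x

for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), tanh(x), relu(x), leaky_relu(x))
```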

Parameters vs. Hyperparameters

Parameters

- Estimated during training from historical data

- Are part of the model

- The estimated values are saved with the trained model

- Dependent on the dataset that the system is trained with

Hyperparameters

- Values are set beforehand

- External to the model.

- Not a part of the trained model and hence the values are not saved.

- Independent of the dataset

Stochastic Gradient Descent (SGD)

- Goal of SGD:

- Minimize a cost function

- J(\theta) as a function of the parameters \theta

- SGD is iterative

- Only two equations to remember:

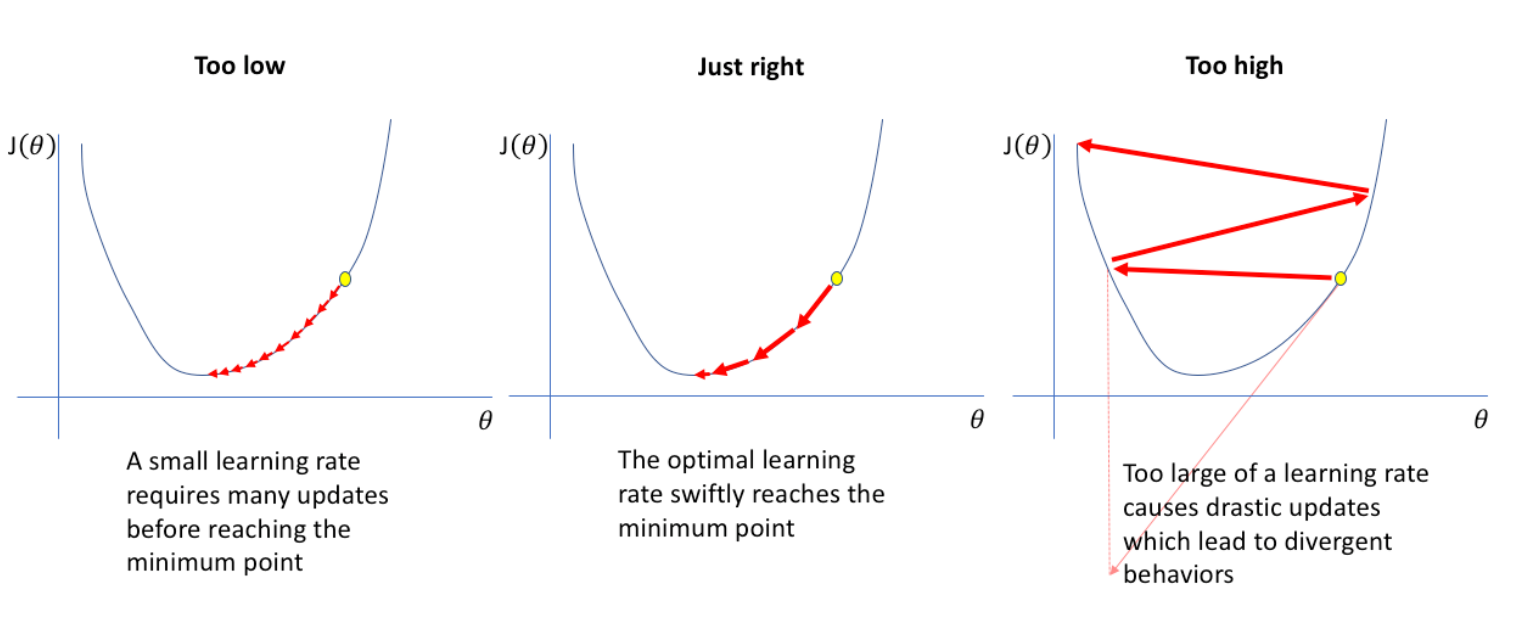

- Learning rate

Learning Rate (\alpha)
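
The effect of the learning rate can be sketched on a toy problem. The equations in the image are not reproduced in the text, so this example assumes the usual gradient-descent update \theta ← \theta − \alpha ∇J(\theta). The cost J(\theta) = (\theta − 3)^2 and the function names are hypothetical, and the full gradient is used rather than a stochastic estimate to keep the behaviour easy to follow.

```python
def grad_J(theta: float) -> float:
    """Gradient of the toy cost J(theta) = (theta - 3)^2 (hypothetical example)."""
    return 2.0 * (theta - 3.0)

def gradient_descent(alpha: float, steps: int = 50, theta: float = 0.0) -> float:
    """Apply theta <- theta - alpha * grad_J(theta) for a fixed number of steps."""
    for _ in range(steps):
        theta = theta - alpha * grad_J(theta)
    return theta

small = gradient_descent(alpha=0.01)   # too small: still far from the minimum at 3
good = gradient_descent(alpha=0.1)     # converges close to 3
large = gradient_descent(alpha=1.1)    # too large: each step overshoots and diverges

print(small, good, large)
```

The same number of steps gives three very different outcomes, which is why \alpha is usually the first hyperparameter to tune.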

Batch Size

- Batch Gradient Descent - Batch Size = N

- In each iteration, the gradient of the loss function is computed using the entire training dataset

- Weights are updated once per pass over the entire dataset

- Since all samples are used, the direction of the gradient is typically stable, but the computation can be very slow, especially with large datasets

- Stochastic Gradient Descent – Batch Size = 1

- In each iteration, a single randomly chosen sample from the training dataset is used to compute the gradient of the loss function.

- Weight updates can be very frequent, with an update occurring for every individual sample.

- As only one sample is used at a time, the direction of the gradient can be noisy and have high variance, but each iteration is computationally fast.

- Mini-batch Gradient Descent – Somewhere in the middle – Common

- Batch Size = 64, 128, 256, etc.

- Finding the optimal batch size will yield the fastest learning.
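
The three variants above differ only in how many samples feed each gradient estimate, so one loop with a `batch_size` argument can sketch all of them. The data (y = 2x without noise), the single-weight model, and the `train` helper are assumptions made for illustration.

```python
import random

def train(xs, ys, batch_size, epochs=20, lr=0.01, seed=0):
    """Fit y = w * x with mean-squared error; returns (w, number_of_updates)."""
    rng = random.Random(seed)
    w = 0.0
    updates = 0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)                      # new sample order every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean of (w*x - y)^2 over the current batch.
            grad = sum(2.0 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= lr * grad
            updates += 1
    return w, updates

xs = [i / 20 for i in range(1, 101)]   # 100 toy inputs in (0, 5]
ys = [2.0 * x for x in xs]             # true weight is 2

w_batch, n_batch = train(xs, ys, batch_size=len(xs))  # batch GD: N samples per update
w_sgd, n_sgd = train(xs, ys, batch_size=1)            # SGD: 1 sample per update
w_mini, n_mini = train(xs, ys, batch_size=32)         # mini-batch GD

print(n_batch, n_sgd, n_mini)
print(w_batch, w_sgd, w_mini)
```

With the same number of epochs, smaller batches perform many more weight updates; on real, noisy data those updates are also noisier, which is the trade-off the list describes.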

Model Size

- Model Size: # of parameters (weights on edges)

- Model Size: # of layers (model depth)
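
Both measures of model size are easy to compute for a fully connected network. The layer sizes below are hypothetical, and counting one bias per output unit in addition to the edge weights is an assumption the notes do not state.

```python
def count_parameters(layer_sizes, include_bias=True):
    """Count weights (one per edge) and, optionally, one bias per output unit."""
    total = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        total += fan_in * fan_out          # weights on the edges between two layers
        if include_bias:
            total += fan_out               # biases (assumption, see lead-in)
    return total

sizes = [784, 128, 64, 10]                 # input, two hidden layers, output
depth = len(sizes) - 1                     # number of weight layers

print(count_parameters(sizes))                      # 109386
print(count_parameters(sizes, include_bias=False))  # 109184
print(depth)                                        # 3
```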

Accuracy and Throughput (Speed)

- Accuracy of the trained model on “new” (unseen) data is the metric of success

- In Computer Vision: images/second is the metric of throughput/speed
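
Both metrics reduce to simple ratios. The helper names and the sample numbers below are made up for illustration only.

```python
def accuracy(predictions, labels):
    """Fraction of held-out samples whose predicted class matches the label."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)

def throughput(num_images, elapsed_seconds):
    """Images processed per second."""
    return num_images / elapsed_seconds

print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))   # 0.75
print(throughput(5120, 4.0))                  # 1280.0
```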

Impact of Model Size and Dataset Size

- Larger models → better accuracy (provided there is enough data to train them)

- More data → better accuracy

- Single-node training is good for:

- Small models and small datasets

- Distributed training is good for:

- Large models and large datasets

Overfitting and Underfitting

- Overfitting – model capacity > data

- The model is not learning but memorizing the training data

- Underfitting – data complexity > model capacity

- The model is not learning because it cannot capture the complexity of the data

How to Deal with Overfitting

- Regularization

- L1 and L2

- Dropout

- Data Augmentation

- Early stopping

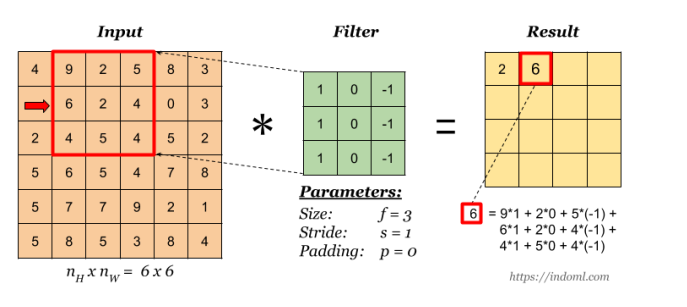
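
Three of the remedies above can be sketched in a few lines each. These are simplified, framework-free illustrations: the function names, the `patience` rule for early stopping, and the use of inverted dropout (rescaling the surviving activations) are assumptions, not details taken from the notes.

```python
import random

def l1_penalty(weights, lam):
    """L1 regularization term: lam * sum(|w|), added to the loss."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """L2 regularization term: lam * sum(w^2), added to the loss."""
    return lam * sum(w * w for w in weights)

def dropout(activations, p, rng):
    """Inverted dropout: zero each activation with probability p, rescale the rest."""
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def early_stopping_epoch(val_losses, patience):
    """Return the epoch index at which training stops under a patience rule."""
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, bad_epochs = loss, 0     # validation loss improved
        else:
            bad_epochs += 1                # no improvement this epoch
            if bad_epochs >= patience:
                return epoch
    return len(val_losses) - 1             # never triggered: ran to the end

print(l1_penalty([1.0, -2.0], lam=0.5))    # 1.5
print(l2_penalty([1.0, -2.0], lam=0.5))    # 2.5
print(dropout([1.0] * 8, p=0.5, rng=random.Random(0)))
print(early_stopping_epoch([1.0, 0.8, 0.7, 0.72, 0.75, 0.9], patience=2))  # 4
```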

Convolution Operation

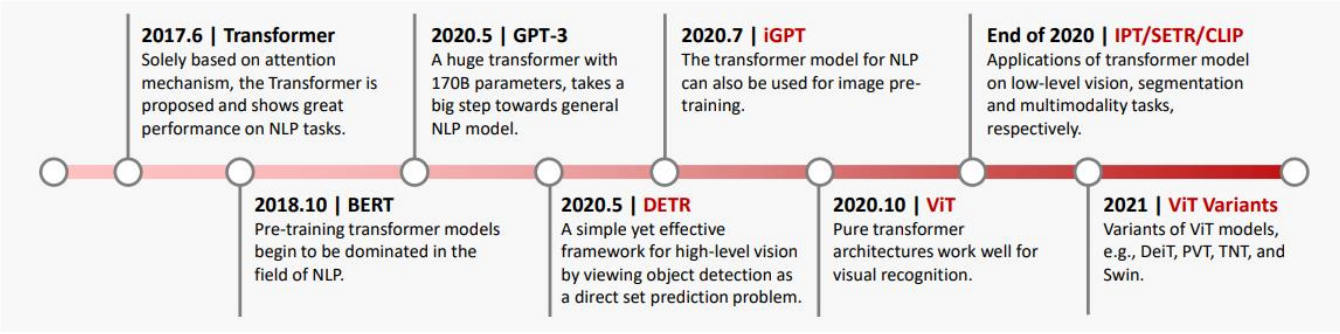
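
The sliding-window computation can be sketched directly. This version assumes stride 1 and no padding, and, like most deep learning frameworks, it does not flip the kernel (strictly a cross-correlation). The 3x3 image and 2x2 kernel are made-up values.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):           # top-left row of the current window
        row = []
        for c in range(iw - kw + 1):       # top-left column of the current window
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]

print(conv2d(image, kernel))   # [[-4, -4], [-4, -4]]
```

The output is smaller than the input: a 3x3 image with a 2x2 kernel gives a 2x2 feature map.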

Transformer Models

- RNN

- Has a short reference window

- Attention Mechanism

- Has, in theory, an infinite reference window (in practice bounded by available memory and compute)

- Encoder

- Output is a continuous vector representation of the inputs

- Decoder

- Feed previous outputs back into the decoder recurrently until the <end> token is generated

- Input Embedding

- Each word maps to a vector

- Positional Encoding

- word vector + positional encoding = positional input embeddings

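- For every odd index of the encoding vector, use the cos function; for every even index, use the sin function

- They have a linear property: the encoding of position pos + k is a linear function of the encoding of position pos, which makes relative positions easy for the model to attend to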

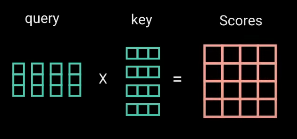
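
The sinusoidal scheme can be sketched as follows. The base of 10000 comes from the original Transformer formulation and is assumed to be what the image shows; `positional_encoding` and the tiny embedding are hypothetical names and values.

```python
import math

def positional_encoding(num_positions, d_model):
    """Return one encoding vector per position: sin on even indices, cos on odd."""
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            # Each (even, odd) index pair shares the same frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

pe = positional_encoding(num_positions=3, d_model=4)

# word vector + positional encoding = positional input embedding
word_vector = [0.5, -0.1, 0.3, 0.8]        # made-up embedding for the word at position 1
positional_input = [w + p for w, p in zip(word_vector, pe[1])]

print(pe[0])                                # [0.0, 1.0, 0.0, 1.0]
print(positional_input)
```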

- Multi-headed attention

- Self-Attention

- Feed the input into three fully connected layers

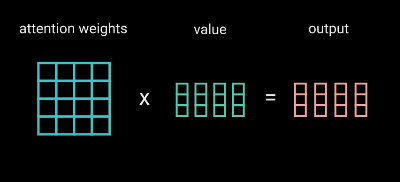

- Create query, key, value vectors

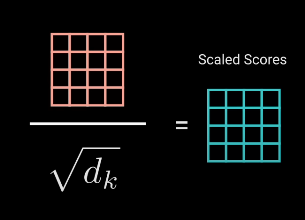

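- Take the dot product of the queries and keys to get a score matrix: the higher the score, the higher the focus

- Scale the scores down by the square root of the key dimension to allow more stable gradients

- Softmax: turns the scaled scores into attention weights between 0 and 1, which lets the model be more confident about which words to attend to

- Multiply the attention weights by the value vectors, then feed the output into a linear layer to process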

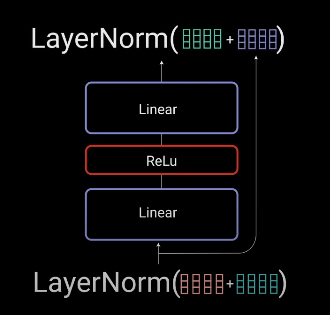
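
The self-attention steps can be sketched for a single head in plain Python. The three fully connected layers that produce the query, key, and value vectors are left out, so Q, K, and V are passed in directly; the tiny matrices are made-up values.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention; returns (outputs, attention_weights)."""
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # Score = dot(query, key), scaled by sqrt(d_k) for more stable gradients.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)                 # attention weights, each row sums to 1
        weights.append(w)
        # Output = weighted sum of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, V))
                        for c in range(len(V[0]))])
    return outputs, weights

Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]

out, attn = attention(Q, K, V)
print(attn)   # the first key matches the query, so it receives the larger weight
print(out)
```

Multi-headed attention runs several such heads in parallel on different learned projections and concatenates their outputs before the final linear layer.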

- Residual Connection, Layer Normalization & Pointwise Feed Forward

- Encoder layers can be stacked, and each one can learn a different representation

- Output Embedding & Positional Encoding

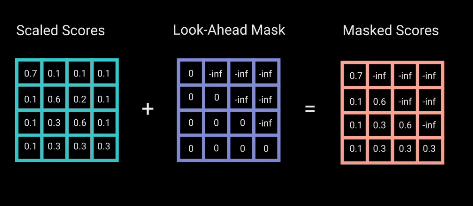

- Decoder Multi-Headed Attention

- Look-Ahead Mask

- Softmax: produces probabilities between 0 and 1

- Decoder layers can be stacked, and each one can pay attention to a different part of the encoder output
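
The look-ahead mask can be sketched as a matrix added to the attention scores before the softmax. Using negative infinity for masked entries is the common convention and an assumption here; the function names and the all-zero scores are illustrative.

```python
import math

def look_ahead_mask(n):
    """0 where position i may attend to position j (j <= i), -inf for future positions."""
    return [[0.0 if j <= i else float("-inf") for j in range(n)] for i in range(n)]

def masked_softmax(scores, mask):
    """Add the mask to the scores, then apply a row-wise softmax."""
    result = []
    for score_row, mask_row in zip(scores, mask):
        masked = [s + m for s, m in zip(score_row, mask_row)]
        top = max(masked)                       # finite: the diagonal is never masked
        exps = [math.exp(v - top) for v in masked]   # exp(-inf) is 0.0
        total = sum(exps)
        result.append([e / total for e in exps])
    return result

scores = [[0.0] * 3 for _ in range(3)]          # equal scores for a 3-token sequence
weights = masked_softmax(scores, look_ahead_mask(3))

for row in weights:
    print(row)
# Row 0 can only see token 0, row 1 sees tokens 0 and 1, row 2 sees all three.
```

Future positions end up with a weight of exactly zero, so the decoder cannot peek at tokens it has not generated yet.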