Introduction to Distributed Deep Neural Network Training – Data Parallelism

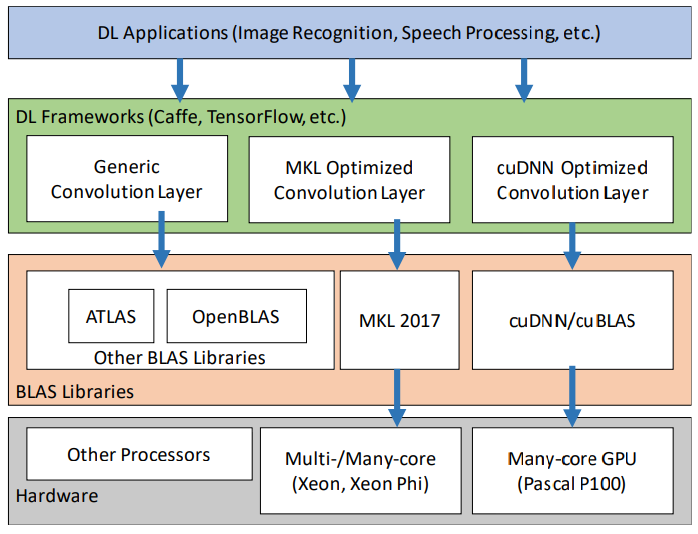

- BLAS libraries: the heart of math operations

  - ATLAS/OpenBLAS

  - NVIDIA cuBLAS

  - Intel Math Kernel Library (MKL)

- The most compute-intensive layers are generally optimized for specific hardware

  - E.g., convolution layers, pooling layers, etc.

- DNN libraries: the heart of convolutions

  - NVIDIA cuDNN (already at its 8th major version, cuDNN v8)

  - Intel MKL-DNN: a promising development for CPU-based ML/DL training

Parallelization Strategies

- Data Parallelism or Model Parallelism

- Hybrid Parallelism

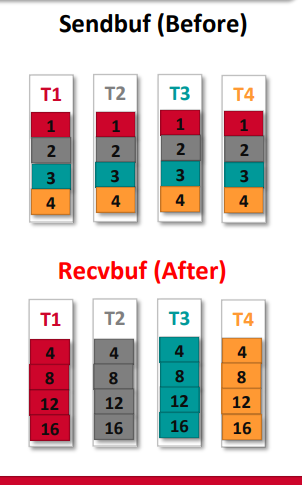

Allreduce Collective

- Computes the element-wise sum of the data from all processes and sends the result to every process
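
The semantics of a sum Allreduce can be sketched in a few lines of plain Python. This is only a single-process simulation for illustration: the function name `allreduce_sum` and the list-of-lists layout (one inner list per rank) are assumptions made here, not part of MPI or any library.

```python
def allreduce_sum(local_buffers):
    """Simulate a sum Allreduce; local_buffers[r] is the vector held by rank r."""
    if not local_buffers:
        return []
    length = len(local_buffers[0])
    if any(len(buf) != length for buf in local_buffers):
        raise ValueError("all ranks must contribute buffers of equal length")
    # Reduce step: element-wise sum across all ranks.
    total = [sum(column) for column in zip(*local_buffers)]
    # "All" step: every rank receives its own copy of the reduced vector.
    return [list(total) for _ in local_buffers]


# Three hypothetical ranks, each holding a 3-element vector.
buffers = [[1.0, 2.0, 3.0], [10.0, 20.0, 30.0], [100.0, 200.0, 300.0]]
results = allreduce_sum(buffers)
for rank, received in enumerate(results):
    print(f"rank {rank} receives {received}")
```

Every rank ends up with the same vector, which is what distinguishes Allreduce from a plain Reduce, where only one root process holds the result.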

Data Parallelism - AllReduce

- Gradient aggregation

  - Call MPI_Allreduce to sum the local gradients across all processes

  - Each process updates its parameters locally using the global gradients
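
The two steps above can be sketched as a toy data-parallel training loop. This is a single-process simulation under stated assumptions: `allreduce_sum` is a hypothetical stand-in for `MPI_Allreduce` with the `MPI_SUM` operation, the model is a one-parameter linear fit `y = w * x`, and the summed gradient is divided by the number of ranks to average it, which is a common convention rather than something the notes prescribe.

```python
def local_gradient(w, shard):
    """Gradient of the mean squared error of y = w * x on one rank's data shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def allreduce_sum(values):
    """Hypothetical stand-in for MPI_Allreduce with MPI_SUM: all ranks get the total."""
    total = sum(values)
    return [total for _ in values]


def data_parallel_step(weights, shards, lr):
    """One training step; weights[r] and shards[r] belong to rank r."""
    # 1. Each rank computes a gradient on its own shard of the data.
    local_grads = [local_gradient(w, s) for w, s in zip(weights, shards)]
    # 2. Allreduce: every rank obtains the sum of all local gradients.
    global_grads = allreduce_sum(local_grads)
    # 3. Each rank updates its own model copy locally.
    #    Dividing by the rank count averages the gradient (an assumption here).
    n_ranks = len(weights)
    return [w - lr * g / n_ranks for w, g in zip(weights, global_grads)]


# Toy data generated from y = 3x, split across two hypothetical ranks.
shards = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]]
weights = [0.0, 0.0]  # every rank starts from the same parameters
for _ in range(200):
    weights = data_parallel_step(weights, shards, lr=0.01)
print(weights)
```

Because every rank starts from the same parameters and applies the same global gradient, the model replicas stay identical without ever exchanging the parameters themselves.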